Transient epileptic amnesia (TEA) is a rare but probably underdiagnosed neurological condition which manifests as relatively brief and generally recurring episodes of amnesia caused by underlying temporal lobe epilepsy.[1] Though descriptions of the condition are based on fewer than 100 cases published in the medical literature,[2] and the largest single study to date included 50 people with TEA,[3][4] TEA offers considerable theoretical significance as competing theories of human memory attempt to reconcile its implications.[5]

The desire to improve our cognitive ability through brain-training games has turned into what is said to be a trillion-dollar industry. Such games are based on the idea that testing our memory, attention and other types of brain processing will improve our overall intelligence and brain function.

Companies like Lumosity, Cogmed and Nintendo are all cashing in hugely on this idea. But many scientists and experts in brain research feel the theory has serious flaws. There is not yet concrete evidence that these products deliver anything close to what the companies claim.

In fact, David Z. Hambrick, associate professor of psychology at Michigan State University, and his colleagues Thomas S. Redick (lead researcher) and Randall W. Engle will soon publish new research that fails to replicate the very study on which so much of the commercial industry rests.

We spoke with Hambrick about the limits of intelligence and the findings from this new and potentially ground-breaking study.

SmartPlanet: What are these brain games actually training or improving?

David Z. Hambrick: The so-called brain games are essentially tasks that require the player to remember information, to attend to information and make judgments, and to comprehend texts or imagine how an object might look in different orientations.

SP: What would be one test used by one of these brain-game companies, like Lumosity?

DZH: One is called a dual n-back test. Users have to monitor two streams of information, one visual and one auditory. Each time one or both of these streams presents an established target, you press a key. So it’s a divided-attention task: You have to split your attention between two channels of input.
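For readers who want to see the mechanics, here is a minimal sketch of how targets in a dual n-back task could be scored. It assumes the conventional n-back rule, in which an item counts as a target when it matches the item presented n trials earlier in the same stream; the interview itself only says "an established target." The function name, the grid positions and the letters are hypothetical and are not taken from Lumosity or from the Jaeggi study.

```python
from typing import List, Sequence, Tuple


def dual_n_back_targets(
    visual: Sequence[int], audio: Sequence[str], n: int
) -> List[Tuple[bool, bool]]:
    """For each trial, report (visual_is_target, audio_is_target).

    Assumption: an item is a target when it equals the item shown
    n trials earlier in the same stream. The first n trials can
    never be targets because nothing precedes them by n steps.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    if len(visual) != len(audio):
        raise ValueError("both streams need the same number of trials")

    results = []
    for i in range(len(visual)):
        if i < n:
            results.append((False, False))
        else:
            results.append((visual[i] == visual[i - n], audio[i] == audio[i - n]))
    return results


# Hypothetical 2-back run: grid positions (visual) and spoken letters (audio).
positions = [3, 5, 3, 5, 1]
letters = ["K", "T", "K", "Q", "Q"]
targets = dual_n_back_targets(positions, letters, n=2)
```

In this made-up run, the third trial is a target in both streams at once and the fourth is a target in the visual stream only, which is the split-attention demand Hambrick describes.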

SP: How is that supposed to be improving our intelligence?

DZH: Great question. It’s designed to improve working memory. You can think of working memory as your mental workspace for concurrently storing and processing information. One of the core capabilities of working memory is the ability to control attention. Lumosity is marketing this test to increase intelligence because it is designed to tap into this ability to control attention. Their idea is that if we can improve the ability to control attention then we can, by extension, improve people’s intelligence.

SP: What was the trigger that launched this huge industry trend of brain games?

DZH: Psychologists have been interested in the idea of improving intelligence for over a century. But until the mid-2000s, people were not very optimistic about this whole enterprise.

However, there was a specific study published in 2008 in the Proceedings of the National Academy of Sciences by Susanne Jaeggi and Martin Buschkuehl that renewed interest in this topic.

SP: Could you describe that study?

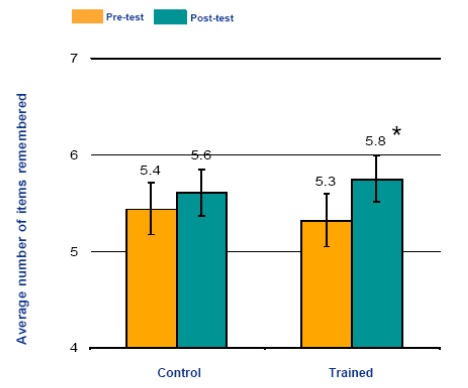

DZH: Sure. They had subjects complete a test to measure reasoning ability. Subjects watched patterns that change across rows or down columns, and made an inference about how those patterns change. Then some of the subjects received the dual n-back training. One group received eight sessions of training, another group received 12 sessions of training, a third group 17 sessions, and a fourth group 19 sessions of training.

Some of the subjects were assigned to a control group, meaning they didn’t receive any training after taking the reasoning test. Then everybody returned for a second administration of the test.

They found that the training subjects showed a bigger gain in reasoning test scores than the control subjects. And they also found that the training groups that received more hours of training showed a bigger gain in reasoning test scores.

They explicitly claimed that this was an increase in intelligence, and not merely in performance on a single test of reasoning: in particular, an increase in what we call fluid intelligence.

SP: What is fluid intelligence?

DZH: Fluid intelligence is the ability to solve novel problems and adapt to new situations, as opposed to crystallized intelligence, which is acculturated learning, so for example knowing the meaning of the word “concur”, or knowing what the Koran is.

It has been thought that fluid intelligence is pretty much fixed, and that it is impervious to efforts to improve it through training. So the finding in the Jaeggi study that fluid intelligence can be improved created a big stir.

SP: But you claim the study has major flaws?

DZH: If you find that people get better on one test of reasoning, it doesn’t necessarily mean that they’re smarter; it means that they’re better on one test of reasoning. You can’t measure fluid intelligence with any single test; it’s measured with multiple tests.

SP: And the other flaw?

DZH: There were some pretty striking differences between the control group and the training groups. The control group, who received no training, went home and did whatever. But the training groups, on the other hand, came in regularly for training. This raises the possibility of motivation being an explanation: They wanted to do well in the experiment.

Another important point is that there were procedural differences across these training groups that really complicate interpretation of the results, and in particular the claim that more training equals more gain. These procedural differences were not reported in the Jaeggi article. We found out about them in Jaeggi’s unpublished dissertation, and through follow-up emails to Jaeggi.

SP: You worked with lead researcher Tom Redick to attempt to replicate the findings from the Jaeggi study. And this research is about to be published. Can you give us a preview of what you found?

DZH: So, we set out to replicate the findings, correcting all of these problems. We had subjects complete not one but eight tests of fluid intelligence. We then assigned them to a training group in which they received 20 sessions of training in Jaeggi’s dual n-back task or to one of two control conditions. The “no-contact” control condition was the same as Jaeggi’s control condition. By contrast, in the “active-control” condition, subjects were trained in a task that we designed to be as demanding as dual n-back without tapping working memory capacity. Finally, we had all subjects perform different versions of the eight intelligence tests halfway through training and at the end.

And what did we find? Zip. There wasn’t much more than a hint of the pattern of results that Jaeggi reported in any of the eight intelligence tests, and nothing in the predicted direction that even approached statistical significance. If someone were to ask me to estimate how much 20 sessions of training in dual n-back tasks improves fluid intelligence, I’d say zero.

SP: How would you define intelligence?

DZH: At a conceptual level intelligence is the ability to learn, to profit from experience, the ability to adapt to new situations, and the ability to solve problems. At a technical level I define intelligence as the variance that’s common across a set of tests of cognitive ability.

SP: Explain that last part for us.

DZH: If you give a large sample of people a battery of cognitive tests (spatial ability, verbal ability, mathematical ability and so on), it turns out that someone who does well on one test is going to tend to do well on all the others. This common factor we call “psychometric G”, or “g” for general.
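As a rough illustration of "the variance that's common across a set of tests," the sketch below takes the first principal component of a test-by-test correlation matrix and reports how much of the total variance it accounts for. This is a simplified stand-in for the factor-analytic methods psychometricians actually use, not Hambrick's procedure, and the scores are invented purely for illustration. All function and variable names are hypothetical.

```python
from math import sqrt
from typing import List, Tuple


def correlation_matrix(scores: List[List[float]]) -> List[List[float]]:
    """scores[p][t] is person p's score on test t.

    Returns the test-by-test matrix of Pearson correlations.
    """
    n_people = len(scores)
    n_tests = len(scores[0])
    columns = [[row[t] for row in scores] for t in range(n_tests)]
    means = [sum(col) / n_people for col in columns]
    centered = [[x - m for x in col] for col, m in zip(columns, means)]
    norms = [sqrt(sum(x * x for x in col)) for col in centered]
    return [
        [
            sum(a * b for a, b in zip(centered[i], centered[j])) / (norms[i] * norms[j])
            for j in range(n_tests)
        ]
        for i in range(n_tests)
    ]


def first_component(corr: List[List[float]], iterations: int = 200) -> Tuple[List[float], float]:
    """Power iteration for the dominant eigenvector and eigenvalue of corr."""
    k = len(corr)
    v = [1.0 / sqrt(k)] * k
    for _ in range(iterations):
        w = [sum(corr[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient v' C v gives the eigenvalue for the unit vector v.
    eigenvalue = sum(v[i] * sum(corr[i][j] * v[j] for j in range(k)) for i in range(k))
    return v, eigenvalue


# Invented scores: six people (rows) on three hypothetical tests (columns).
scores = [
    [10, 12, 9],
    [14, 15, 13],
    [8, 9, 10],
    [16, 18, 15],
    [12, 11, 12],
    [18, 17, 17],
]
corr = correlation_matrix(scores)
loadings, eigenvalue = first_component(corr)
shared_variance = eigenvalue / len(corr)  # fraction of total variance on the common component
```

Because every pair of tests in this toy data set is positively correlated, all three tests load in the same direction on the first component, which is the pattern Hambrick describes: people who do well on one test tend to do well on the others.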

SP: How do we currently measure intelligence?

DZH: We measure intelligence with tests that are designed to tap into cognitive processes like retrieving information from memory, manipulating mental visual images, and making rapid judgments about stimuli, as well as tests that require analytical reasoning where you have to make deductions or inferences.

SP: Presumably this is involved in IQ testing. What does IQ really mean?

DZH: IQ is an index of that general factor. In a standardized IQ test people take a bunch of sub-tests. They take tests of comprehension, spatial reasoning, etc. IQ is a summary measure that reflects performance across all of those things.

But there is still intense speculation about what IQ really is. It could reflect the efficiency of the processes involving working memory and attention. It could reflect strategies for processing information and solving problems. It certainly reflects brain function. But this is the million-dollar question for intelligence researchers: What exactly is intelligence?

SP: Because it’s a value that society deems important, and it has very real implications, right?

DZH: Well, one thing we know for sure is that it’s practically useful. IQ, despite what people will say about it being a meaningless number, predicts a lot of things. It predicts job performance better than any single variable that we know of. It predicts educational attainment, it predicts income, it predicts health, it predicts longevity even after you take into account sort of obvious confounding factors like socio-economic status. So it captures something important and something useful. If we were to no longer use IQ tests for things like personnel tests or college admissions, it would cost society billions. IQ is the single best predictor of a lot of outcomes that we value in society.

SP: Is there any proven way to improve our intelligence?

DZH: There’s been all this focus on brain training and cognitive training. But there is not convincing evidence to support the claim by Lumosity and other companies that these programs have far-reaching beneficial effects on cognitive functioning. However, there is actually some evidence that physical exercise, ironically, does improve brain function. And there is something else we can do.

SP: What?

DZH: I talked about this distinction between fluid intelligence and crystallized intelligence. Fluid intelligence is the ability to solve novel problems and crystallized intelligence is your knowledge acquired through experience.

Fluid intelligence is hard to improve. It’s not readily modifiable like weight. But crystallized intelligence can be improved. You can increase crystallized intelligence through reading. You learn. And this is a good thing to do. We want people to go into the voting booth with enough knowledge to make an informed decision about whom to vote for. And it can come from acquiring crystallized intelligence about the world that might be relevant to making good decisions.
